Decoherence, noise and all things faulty - the science behind what makes quantum computers fail

What is decoherence? How does it occur and what effect does it have on quantum computers? Can we mitigate this phenomenon?

Short Summary:

Decoherence is the process where a quantum system loses its ‘quantumness’ over time - becoming more and more classical.

Decoherence has many causes, such as interactions with the environment, noise from a variety of sources, and defects and impurities in materials.

We can mitigate decoherence with quantum error correction codes (QEC codes) and by adequately isolating a qubit system from the environment.

Decoherence is a fundamental obstacle in the way of ‘quantum advantage’, but over time quantum computers are being made to withstand this phenomenon.

Decoherence refers to the process whereby the quantum information of a system leaks into the environment, altering the quantum state. As the ‘quantumness’ of a system is lost, quantum computation becomes less effective and the advantage it provides starts to evaporate. Properties such as superposition and entanglement dissipate, and the system behaves more and more classically. Furthermore, this unwanted interaction with the external environment produces errors, making quantum algorithms unreliable. It is therefore the job of any quantum computing physicist to reduce the interaction of the quantum computer with the environment in order to maintain high performance. If the coherence of quantum operations can be maintained, then the speed-up that quantum computers promise for certain problems (exponential in some cases) can be put to use. It is important to mention that decoherence is different from deliberately collapsing a quantum state by measurement, i.e. looking inside Schrödinger’s box. Decoherence is not a measurement made by an observer; it is a natural process that occurs gradually over time, and it is the reason why macroscopic systems generally behave classically.
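As a rough illustration of what losing ‘quantumness’ looks like, the sketch below tracks a single qubit that starts in an equal superposition. It assumes a deliberately simple model in which the off-diagonal entries of the density matrix (the coherences) decay exponentially with a characteristic time, here called t2. The function names and the exponential form are illustrative assumptions, not a description of any particular device.

```python
import math

def dephased_density_matrix(t, t2):
    """Density matrix of an equal superposition after dephasing for time t.

    Assumption: the off-diagonal terms decay as exp(-t / t2). The diagonal
    terms (the populations) are left untouched by this toy model.
    """
    coherence = 0.5 * math.exp(-t / t2)
    return [[0.5, coherence],
            [coherence, 0.5]]

def purity(rho):
    """Tr(rho^2): 1.0 for a pure state, 0.5 for a fully mixed qubit."""
    return sum(rho[i][j] * rho[j][i] for i in range(2) for j in range(2))

if __name__ == "__main__":
    for t in (0.0, 1.0, 5.0):
        rho = dephased_density_matrix(t, t2=1.0)
        print(f"t = {t}: coherence = {rho[0][1]:.3f}, purity = {purity(rho):.3f}")
```

In this toy model the purity falls from 1 (a pure superposition) towards 0.5 (the equivalent of a classical coin flip), while the populations on the diagonal never change.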

Things that cause Quantum Decoherence

Coupling to the environment is a primary cause of decoherence. Coupling refers to an interaction between two systems that allows energy or information to pass between them. If a quantum system couples to an external environment, energy and information are lost to that environment. For example, such interactions can occur through coupling to electrons, photons or other particles in the surrounding environment. The stronger this coupling, the faster the system decoheres.
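To make the link between coupling strength and decoherence rate concrete, here is a minimal sketch of a textbook-style toy model: one qubit coupled to a handful of environment spins through a ZZ-type interaction. The assumptions are mine and are chosen for simplicity: units where ħ = 1, every environment spin starts in an equal superposition, and the coupling values are made up. Under those assumptions the qubit's coherence is multiplied by cos(2·g·t) for each environment spin with coupling g.

```python
import math

def coherence_factor(couplings, t):
    """Magnitude of the qubit's remaining coherence at time t.

    Assumption: each environment spin k contributes a factor cos(2 * g_k * t),
    which is the overlap of the two environment states that correlate with
    the qubit being in |0> or |1>.
    """
    factor = 1.0
    for g in couplings:
        factor *= math.cos(2.0 * g * t)
    return abs(factor)

if __name__ == "__main__":
    weak = [0.1] * 20    # hypothetical weak couplings
    strong = [0.2] * 20  # the same environment, coupled twice as strongly
    for t in (0.0, 0.5, 1.0):
        print(f"t = {t}: weak = {coherence_factor(weak, t):.3f}, "
              f"strong = {coherence_factor(strong, t):.3f}")
```

With only a few environment spins the coherence eventually revives, because the model is fully reversible. It is the sheer size of a realistic environment that makes the loss effectively permanent.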

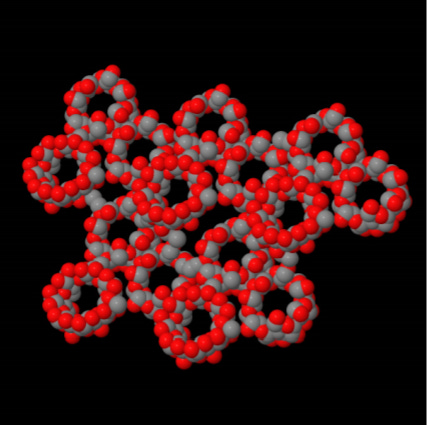

Another prevalent cause of decoherence is noise. Noise can be thought of as unwanted disturbances that affect quantum systems. It can arise from many sources, such as thermal fluctuations, vibrations, charge and spin fluctuations, or imperfections in the design of a quantum computer. What is interesting, therefore, is that errors and faults are not always caused externally. For quantum computers that utilise trapped ion qubits, decoherence can be caused by magnetic field fluctuations inside the computer. Equally, superconducting qubits are prone to errors caused by defects and impurities in the material.
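One way to picture how noise turns into decoherence: if every run of an experiment gives the qubit a slightly different random phase kick, the average over many runs has less coherence than any single run. The sketch below assumes Gaussian-distributed phase kicks with standard deviation sigma (an illustrative choice of noise model) and compares a Monte Carlo average with the known result exp(-sigma² / 2) for that distribution.

```python
import cmath
import math
import random

def average_coherence(sigma, shots, seed=0):
    """Average of exp(i * phi) over random phase kicks phi ~ N(0, sigma^2).

    The magnitude of this average is the fraction of coherence that
    survives once the runs are combined.
    """
    rng = random.Random(seed)
    total = 0j
    for _ in range(shots):
        phi = rng.gauss(0.0, sigma)
        total += cmath.exp(1j * phi)
    return abs(total / shots)

if __name__ == "__main__":
    for sigma in (0.1, 1.0, 2.0):
        estimate = average_coherence(sigma, shots=20000)
        exact = math.exp(-sigma ** 2 / 2)
        print(f"sigma = {sigma}: simulated = {estimate:.3f}, exact = {exact:.3f}")
```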

Imperfect gate fidelity and timing jitter can also cause quantum decoherence. Most quantum computing systems relay instructions to qubits in the form of control pulses (typically precisely shaped microwave or laser pulses, depending on the hardware). If the timing of such pulses is off, or if certain gates are faulty, this variability can cause qubits to deviate from their intended quantum state. These errors degrade the coherence of the quantum system.
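A small sketch of why pulse timing matters, assuming an idealised resonant drive: a pulse of duration t rotates the qubit by an angle Ω·t, where Ω is the Rabi frequency, and a bit flip needs exactly Ω·t = π. The Gaussian jitter model and all parameter values below are hypothetical.

```python
import math
import random

def flip_probability(rabi_frequency, duration):
    """Probability of finding |1> after driving |0>: sin^2(Omega * t / 2)."""
    theta = rabi_frequency * duration
    return math.sin(theta / 2.0) ** 2

def average_flip_probability(rabi_frequency, jitter_std, shots, seed=0):
    """Average flip probability when the pulse length jitters from shot to shot."""
    rng = random.Random(seed)
    ideal_duration = math.pi / rabi_frequency  # a perfect pi pulse
    total = 0.0
    for _ in range(shots):
        duration = ideal_duration + rng.gauss(0.0, jitter_std)
        total += flip_probability(rabi_frequency, duration)
    return total / shots

if __name__ == "__main__":
    print("perfect timing:", flip_probability(1.0, math.pi))
    print("with jitter:   ", average_flip_probability(1.0, 0.3, shots=20000))
```

Any one mistimed pulse is still a perfectly coherent rotation, just the wrong one. It is the shot-to-shot randomness that makes the averaged result look like lost coherence.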

A final mind-bending cause of decoherence is in fact measurement itself. That’s correct, reader: even when you go to measure a property of a quantum system, a phenomenon called ‘measurement backaction’ can disturb the system. It is closely related to the uncertainty principle: the act of measurement unavoidably disturbs the system, which can change the quantity being measured. This means that there will always be a probabilistic uncertainty in quantum mechanics. Even at absolute zero temperature (where classical particles would be frozen in place), quantum particles retain a small zero-point motion due to quantum fluctuations. This is unlike classical mechanics, which posits that the error of an experiment could be reduced to zero if all devices were perfect and the user had perfect knowledge of the setup.

Effects of Quantum Decoherence on Quantum Computers

Quantum computers rely heavily on the fidelity of qubits and their corresponding quantum states. If quantum states are naturally decohering, errors accumulate in the computation and wrong answers can be output. Decoherence can destroy the superposition and entanglement of qubits, meaning that quantum computers essentially lose their biggest weapon. Quantum algorithms rely heavily on a phenomenon known as ‘interference’. Quantum interference occurs when the probability amplitudes of the states in a superposition combine and either amplify or cancel out, significantly influencing the probability of specific measurement outcomes. Decoherence can destroy this effect, leaving quantum computers unable to arrive at correct answers.
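The smallest interference experiment is a useful sanity check here. Apply a Hadamard gate to a qubit in |0>, then apply a second Hadamard: the two paths leading to |1> cancel and the qubit returns to |0> with certainty. The sketch below inserts a dephasing step between the two gates, modelled (as an assumption) by scaling the off-diagonal density matrix entries by a factor between 0 and 1. The helper names are illustrative.

```python
import math

S = 1.0 / math.sqrt(2.0)
HADAMARD = [[S, S],
            [S, -S]]

def matmul(a, b):
    """Product of two 2x2 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def apply_hadamard(rho):
    """rho -> H rho H (H is real and symmetric, so it is its own adjoint)."""
    return matmul(matmul(HADAMARD, rho), HADAMARD)

def dephase(rho, coherence):
    """Scale the off-diagonal entries: 1.0 = no decoherence, 0.0 = complete."""
    return [[rho[0][0], coherence * rho[0][1]],
            [coherence * rho[1][0], rho[1][1]]]

def probability_of_zero(coherence):
    """Probability of measuring 0 after Hadamard, dephasing, Hadamard."""
    rho = [[1.0, 0.0], [0.0, 0.0]]  # start in |0>
    rho = apply_hadamard(rho)
    rho = dephase(rho, coherence)
    rho = apply_hadamard(rho)
    return rho[0][0]

if __name__ == "__main__":
    for c in (1.0, 0.5, 0.0):
        print(f"coherence = {c}: P(0) = {probability_of_zero(c):.3f}")
```

In this model the probability of the correct answer works out to (1 + coherence) / 2, so full decoherence leaves a 50/50 guess: the interference that singled out the right outcome is gone.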

Decoherence is the bane of quantum computation, without a doubt.

Ways to Prevent Quantum Decoherence

Quantum error correction is one of the most prevalent ways to deal with decoherence. Error correction codes work under the assumption that errors will occur in quantum systems, so they are tailored to actively detect and correct them. They do this by spreading the information of one logical qubit across several physical qubits and comparing those qubits with one another to recover the correct state. Whilst they can fail if errors occur faster than they can be corrected, they generally work well and extend the time for which a quantum computer remains stable. Quantum error correction codes do not measure the state of the data qubits directly, which would cause the state to collapse. Instead, they measure ancillary qubits (side qubits) that are coupled to the data qubits of interest. This reveals whether a qubit has accumulated an error without destroying the entire quantum state.
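The idea of comparing qubits without reading them can be sketched with the three-qubit repetition code. The simulation below is classical and handles bit-flip errors only, so it is a simplification and not a full quantum code: real codes must also handle phase errors. The two parity checks stand in for the ancillary qubit measurements, and the error probability p is a made-up parameter.

```python
import random

def syndrome(bits):
    """Parities of neighbouring pairs, as two ancilla measurements would report.

    The parities reveal where the bits disagree, not what value they encode.
    """
    return (bits[0] ^ bits[1], bits[1] ^ bits[2])

# Which bit to flip back for each syndrome (None = no error detected).
CORRECTION = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}

def correct(bits):
    """Return a corrected copy of bits, assuming at most one flip occurred."""
    fixed = list(bits)
    position = CORRECTION[syndrome(bits)]
    if position is not None:
        fixed[position] ^= 1
    return fixed

def logical_error_rate(p, trials, seed=0):
    """Fraction of trials in which an encoded 0 is decoded incorrectly."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        noisy = [int(rng.random() < p) for _ in range(3)]  # flips applied to 000
        if correct(noisy) != [0, 0, 0]:
            failures += 1
    return failures / trials

if __name__ == "__main__":
    p = 0.1
    print("unprotected error rate:", p)
    print("encoded error rate:    ", logical_error_rate(p, trials=20000))
```

A single flip is always repaired, so the encoded bit only fails when two or more flips happen at once, with probability 3p² - 2p³. That is the sense in which a code can be overloaded: the protection only helps while p is small enough.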

Isolation of a quantum system from the environment can help to prevent decoherence. Isolation is less of a method and more of a gold standard - every qubit system should be isolated to an extent. By enhancing this physical isolation with vacuum chambers, electromagnetic shielding or cooling to low temperatures, it is possible to diminish the rate of decoherence.
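To get a feel for why cooling helps, the sketch below evaluates the Bose-Einstein formula for the average number of thermal photons at a given frequency and temperature. The 5 GHz qubit frequency and the 20 mK operating temperature are assumed, order-of-magnitude values of the kind often quoted for superconducting hardware. They do not come from any specific machine.

```python
import math

PLANCK = 6.62607015e-34     # J s (exact SI value)
BOLTZMANN = 1.380649e-23    # J / K (exact SI value)

def thermal_photon_number(frequency_hz, temperature_k):
    """Average thermal occupation: 1 / (exp(h f / (k T)) - 1)."""
    ratio = PLANCK * frequency_hz / (BOLTZMANN * temperature_k)
    return 1.0 / math.expm1(ratio)

if __name__ == "__main__":
    frequency = 5e9  # assumed qubit frequency in Hz
    for temperature in (300.0, 4.0, 0.02):
        n = thermal_photon_number(frequency, temperature)
        print(f"T = {temperature} K: average thermal photons = {n:.3g}")
```

At room temperature the environment holds on the order of a thousand thermal photons at this frequency, each one a potential source of unwanted excitation. At tens of millikelvin the number drops far below one.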

Material design can be a huge player in mitigating errors. Microsoft recently announced a quantum processor designed around topological qubits. Topological qubits are intended to have a built-in defence against errors that comes from the inherent properties of the material. On the most fundamental of scales, it is important for quantum computing physicists to design computers that are inherently robust against errors. This will allow systems to scale with far less worry about errors, and quantum algorithms to run effectively.

Concluding Thoughts

It is vital that decoherence is understood and analytically characterised. Studying decoherence gives a deeper understanding of the quantum world and its boundary with the classical world. Decoherence is the reason we observe classical behaviour on the macroscopic scale, rather than quantum phenomena. This helps to explain how classical and quantum physics co-exist. The goal for any quantum computing physicist is to know accurately which types of decoherence are occurring in their quantum system, in order to mitigate them effectively. In a world where quantum decoherence is greatly minimised, we will see the advent of powerful quantum algorithms capable of surpassing classical computation. Some problems that would take many years to solve classically could then be cracked in far less time. Whilst the perfect quantum computer doesn’t exist, a quantum computer that can maintain a quantum state for long enough can change the landscape of computation. Dare I say it could change the world.